MHISystems Overview

AI-Powered Mental Health Diagnostic Platform

About the Project

MHISystems is an AI-powered mental health diagnostic platform implemented by Loudon Psychological Services. Founded by doctors and psychologists, MHISystems streamlines the healthcare process by simplifying report generation and patient diagnostics and by using AI to evaluate and enhance patient care. During my internship, I worked directly with psychologists, addressing their needs and developing software solutions built on leading AI technologies.

Technologies

Frontend Framework

Vue.js with JavaScript for responsive, interactive user interfaces

Backend Development

C# .NET framework for secure server-side processing

Database Management

SQL Server for patient and provider data storage and retrieval

AI & Machine Learning

Python-based Large Language Model (LLM) integration for resource input and tailored responses

My Contributions

Frontend Development with Vue.js

• Table interface for reports

• AI chat interface with Kendo UI

• Template table for diagnoses

• Report PDF downloading

LLM Response Generation with Python

• Python backend resource intake

• Generate report functions

• Debugging with comments

• Real-time response display in the AI chat interface

Database Architecture in SQL Server Management Studio

• Constructed SQL tables to store patient reports, documents, and AI chat interaction responses

My System Screenshots

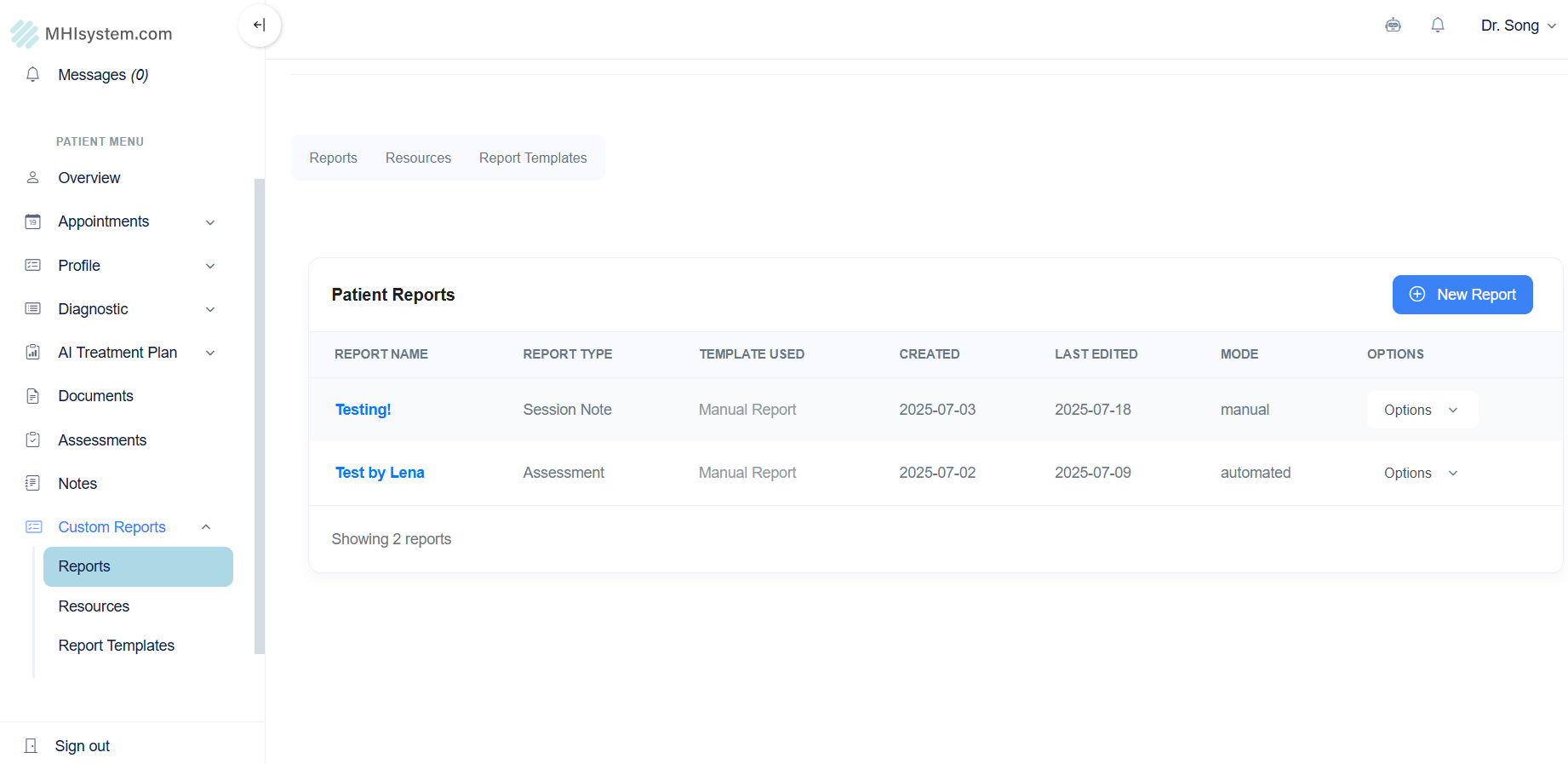

Patient Report Interface

Main dashboard showing the projects that doctors create for their patients. It saves generated reports and AI conversations.

AI Report Templates

List of templates that doctors write and send to the LLM when generating a diagnostic report.

Template Editor

The LLM prompt sent to the AI API, which differs for each assessment (e.g., ADHD, ASD, OCD).

Chat Interaction Interface

A system for having a conversation with the AI about documents, files, and templates. The AI helps write a report from that data.

Template Selection

The provider chooses which template prompt to send to the LLM based on the report they are writing.

Python Debugging Console

The provider sends the template, documents, and assessments to the backend. All of that data is shown in the console here.

AI Interaction

The user prompts the AI, the data is sent to the LLM, and the generated report is pasted into the text editor for the provider to edit and save.

PDF Export

Once the provider is done writing and editing the report, they can export the text as a PDF to send to the patient.

Development Challenges & Solutions

Challenge: Database Workflow Organization

Solution: I presented a streamlined architecture that combined Vue.js, .NET, Flask, and SQL Server with a new Python LLM integration. All existing patient and provider data already lived in SQL Server, managed through SQL Server Management Studio (SSMS). After discussing the architecture, we decided to keep the current database structure and store the additional documents, PDFs, and chat interaction histories in SQL as well. The team had considered using Azure Cosmos DB to vectorize document uploads for the AI chat, but with the new LLM feature I was responsible for already in progress, adding a second database to the existing project would have introduced more complexity than it was worth. The goal was to keep things simple, and it worked.
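To illustrate the idea of keeping chat histories in relational tables next to the reports, here is a minimal sketch. It uses Python's built-in sqlite3 module as a stand-in for SQL Server so that it runs anywhere, and every table name, column name, and helper function in it is hypothetical rather than taken from the actual MHISystems schema.

```python
import sqlite3

# Hypothetical schema: one row per report, with uploaded documents and
# chat messages linked back to the report they belong to.
SCHEMA = """
CREATE TABLE reports (
    id INTEGER PRIMARY KEY,
    patient_id INTEGER NOT NULL,
    body TEXT NOT NULL
);
CREATE TABLE documents (
    id INTEGER PRIMARY KEY,
    report_id INTEGER NOT NULL REFERENCES reports(id),
    filename TEXT NOT NULL,
    content BLOB NOT NULL
);
CREATE TABLE chat_messages (
    id INTEGER PRIMARY KEY,
    report_id INTEGER NOT NULL REFERENCES reports(id),
    role TEXT NOT NULL CHECK (role IN ('provider', 'assistant')),
    message TEXT NOT NULL
);
"""


def create_store() -> sqlite3.Connection:
    """Open an in-memory database and create the illustrative tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def save_chat_turn(conn: sqlite3.Connection, report_id: int, role: str, message: str) -> int:
    """Store one chat message and return its row id."""
    cur = conn.execute(
        "INSERT INTO chat_messages (report_id, role, message) VALUES (?, ?, ?)",
        (report_id, role, message),
    )
    conn.commit()
    return cur.lastrowid


def load_chat_history(conn: sqlite3.Connection, report_id: int) -> list:
    """Return (role, message) pairs for a report in the order they were saved."""
    rows = conn.execute(
        "SELECT role, message FROM chat_messages WHERE report_id = ? ORDER BY id",
        (report_id,),
    )
    return rows.fetchall()
```

Keeping the history in the same database as the reports means a conversation can be reloaded with a single query keyed on the report, without a second data store to keep in sync.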

Challenge: LLM Diagnostic Accuracy

Solution: A large part of this project boiled down to hours of debugging (surprise!). In the "Python Debugging Console" screenshot above, you can see all the data being sent to the LLM for response generation. For a while, however, none of that data reached the LLM. Its responses were static: they came from pre-written functions with prompts hard-coded inside them. I fixed this by passing all user-uploaded resources from C# (including the provider templates) to a Python function called generate_llm_response(prompt). I combined all the resources into a single string and passed it in through the prompt argument, and voilà. Instead of relying on pre-written prompts, the function now received the correct data (including the template, which is effectively the user-selected prompt), and the output became a proper diagnostic report.
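The fix can be sketched roughly as follows. This is a simplified illustration, not the production code: only the name generate_llm_response and its prompt argument come from the project. The build_prompt helper, the section labels, and the call_model parameter (a placeholder for whatever client actually talks to the LLM API) are assumptions added to keep the example self-contained.

```python
from typing import Callable, Sequence


def build_prompt(template: str, documents: Sequence[str], assessments: Sequence[str]) -> str:
    """Combine the provider's template and uploaded resources into one string.

    Hypothetical helper: the labels and ordering are illustrative only.
    """
    sections = [f"TEMPLATE:\n{template.strip()}"]
    for i, doc in enumerate(documents, start=1):
        sections.append(f"DOCUMENT {i}:\n{doc.strip()}")
    for i, assessment in enumerate(assessments, start=1):
        sections.append(f"ASSESSMENT {i}:\n{assessment.strip()}")
    return "\n\n".join(sections)


def generate_llm_response(prompt: str, call_model: Callable[[str], str]) -> str:
    """Send the combined prompt to the model and return its reply.

    call_model is an assumed stand-in for the real LLM client call.
    """
    if not prompt.strip():
        # Fail loudly instead of silently falling back to a static prompt.
        raise ValueError("prompt is empty; no resources were passed to the LLM")
    # Debug output, similar in spirit to the console in the screenshot above.
    print(f"[debug] sending {len(prompt)} characters to the LLM")
    return call_model(prompt)
```

Rejecting an empty prompt makes the original bug visible right away: if the resources never arrive from the C# side, the call fails instead of quietly producing a generic report.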